This tutorial will help you install Apache Kafka on Ubuntu 18.04 and Ubuntu 16.04 Linux systems.

Step 1 – Install Java

Apache Kafka requires Java to run, so you must have Java installed on your system. Install the default OpenJDK package from Ubuntu's official repositories. You can also install a specific version of Java from here.
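The Java step can be sketched as a small Bash helper file that you source, assuming Ubuntu's default-jdk package is the Java you want. The function names install_java, parse_java_major and check_java are hypothetical and chosen for this example; the version check only reads the output of java -version.

```shell
# Helpers for Step 1: source this file (". ./java-helpers.sh"), then call the functions.

# Install Ubuntu's default OpenJDK package (needs root and network access).
install_java() {
  sudo apt-get update && sudo apt-get install -y default-jdk
}

# Extract the major Java version from `java -version` output passed as $1.
# Handles both the legacy scheme ("1.8.0_292" -> 8) and the newer one ("11.0.20" -> 11).
parse_java_major() {
  local ver
  ver=$(printf '%s\n' "$1" | sed -n 's/.*version "\([^"]*\)".*/\1/p' | head -n 1)
  case "$ver" in
    1.*) printf '%s\n' "$ver" | cut -d. -f2 ;;
    *)   printf '%s\n' "$ver" | cut -d. -f1 ;;
  esac
}

# Print the major version of the java found on PATH; java -version writes to stderr.
check_java() {
  command -v java >/dev/null 2>&1 || { echo "java not found on PATH" >&2; return 1; }
  parse_java_major "$(java -version 2>&1)"
}
```

On a fresh machine you would run install_java and then check_java, which prints the installed major version number.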

Step 2 – Download Apache Kafka

Download the Apache Kafka binary release from the official download website; you can also pick any nearby mirror. Then extract the archive file.
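The download and extraction can be scripted roughly as follows. This is a sketch under assumptions: it fetches from archive.apache.org, which keeps past Kafka releases under dist/kafka/, and it unpacks into a directory you pass in, such as /usr/local/kafka (a hypothetical install path). Choose the Kafka version and Scala build from the download page yourself; the ZooKeeper-based setup in this tutorial needs a release that still ships zookeeper-server-start.sh.

```shell
# Helpers for Step 2: source this file, then call install_kafka.

# Build the archive.apache.org URL for a binary release.
# $1 = Kafka version, $2 = Scala build it was compiled against.
kafka_archive_url() {
  printf 'https://archive.apache.org/dist/kafka/%s/kafka_%s-%s.tgz\n' "$1" "$2" "$1"
}

# Download a release and unpack it into $3 (assumed install dir, e.g. /usr/local/kafka).
install_kafka() {
  local version="$1" scala="$2" dest="$3" tmp status
  tmp=$(mktemp -d) || return 1
  wget -q -O "$tmp/kafka.tgz" "$(kafka_archive_url "$version" "$scala")" || { rm -rf "$tmp"; return 1; }
  # --strip-components=1 drops the top-level kafka_<scala>-<version>/ directory.
  sudo mkdir -p "$dest" && sudo tar -xzf "$tmp/kafka.tgz" -C "$dest" --strip-components=1
  status=$?
  rm -rf "$tmp"
  return "$status"
}
```

For example, install_kafka VERSION SCALA /usr/local/kafka, with VERSION and SCALA taken from the release you picked.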

Step 3 – Set Up Kafka Systemd Unit Files

Next, create systemd unit files for the ZooKeeper and Kafka services. These let you start and stop the services with the systemctl command.

First, create a systemd unit file for ZooKeeper under /etc/systemd/system, add the service definition, then save and close the file.

Next, create a Kafka systemd unit file the same way and add its service definition. Make sure to set the correct JAVA_HOME path for the Java version installed on your system. Save and close the file.

Finally, reload the systemd daemon to apply the new changes.
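A sketch of the two unit files, written from a Bash function so the paths can be filled in. The paths are assumptions: Kafka unpacked in a directory such as /usr/local/kafka (hypothetical), the stock config/zookeeper.properties and config/server.properties files, and a JAVA_HOME you supply. The helper name write_kafka_units and the unit names zookeeper and kafka are choices made for this example.

```shell
# Write zookeeper.service and kafka.service into $1 (normally /etc/systemd/system).
# $2 = Kafka install dir (assumed), $3 = JAVA_HOME for the Kafka broker.
write_kafka_units() {
  local unit_dir="$1" kafka_home="$2" java_home="$3"

  cat > "$unit_dir/zookeeper.service" <<EOF
[Unit]
Description=Apache ZooKeeper server
Requires=network.target remote-fs.target
After=network.target remote-fs.target

[Service]
Type=simple
ExecStart=$kafka_home/bin/zookeeper-server-start.sh $kafka_home/config/zookeeper.properties
ExecStop=$kafka_home/bin/zookeeper-server-stop.sh
Restart=on-abnormal

[Install]
WantedBy=multi-user.target
EOF

  cat > "$unit_dir/kafka.service" <<EOF
[Unit]
Description=Apache Kafka server
Requires=zookeeper.service
After=zookeeper.service

[Service]
Type=simple
Environment="JAVA_HOME=$java_home"
ExecStart=$kafka_home/bin/kafka-server-start.sh $kafka_home/config/server.properties
ExecStop=$kafka_home/bin/kafka-server-stop.sh

[Install]
WantedBy=multi-user.target
EOF
}

# Example (as root):
#   write_kafka_units /etc/systemd/system /usr/local/kafka /usr/lib/jvm/java-11-openjdk-amd64
#   systemctl daemon-reload
```

On Ubuntu, dirname "$(dirname "$(readlink -f "$(which java)")")" usually prints a suitable JAVA_HOME, because /usr/bin/java is a symlink chain into /usr/lib/jvm.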

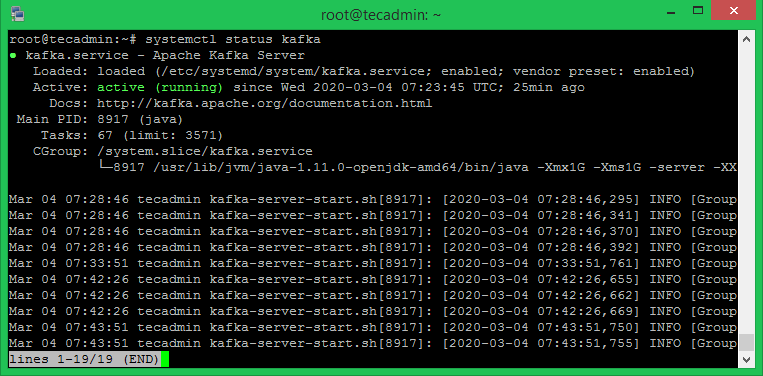

Step 4 – Start Kafka Server

Kafka requires ZooKeeper, so first start a ZooKeeper server on your system. You can use the script bundled with Kafka to start a single-node ZooKeeper instance. Then start the Kafka server and check its running status.
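The start-up order can be sketched as below, assuming systemd units named zookeeper and kafka and the default ports in the bundled config files (2181 for ZooKeeper, 9092 for Kafka). wait_for_port and start_kafka_services are hypothetical helper names; wait_for_port relies on Bash's /dev/tcp redirection, so source this file from bash rather than sh.

```shell
# Helpers for Step 4 (Bash-only: wait_for_port uses /dev/tcp).

# Return 0 once something accepts TCP connections on $1:$2, or 1 after about $3 seconds.
wait_for_port() {
  local host="$1" port="$2" timeout="$3" i=0
  while [ "$i" -lt "$timeout" ]; do
    # The subshell opens the connection and closes it again when it exits.
    if (exec 3<>"/dev/tcp/$host/$port") 2>/dev/null; then
      return 0
    fi
    sleep 1
    i=$((i + 1))
  done
  return 1
}

# Start ZooKeeper, wait for its client port, then start Kafka and show its status.
start_kafka_services() {
  sudo systemctl start zookeeper || return 1
  wait_for_port 127.0.0.1 2181 30 || { echo "ZooKeeper did not open port 2181" >&2; return 1; }
  sudo systemctl start kafka || return 1
  wait_for_port 127.0.0.1 9092 60 || { echo "Kafka did not open port 9092" >&2; return 1; }
  systemctl status kafka --no-pager
}
```

Waiting on the ports is a design choice: a Type=simple unit counts as started as soon as its process launches, so systemctl can return before the server actually accepts connections.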

All done: Kafka is now installed and running. The rest of this tutorial shows how to work with the Kafka server.

Step 5 – Create a Topic in Kafka

Kafka provides multiple pre-built shell scripts for working with it. First, create a topic named “testTopic” with a single partition and a single replica.

The replication factor sets how many copies of the data are kept. Since we are running a single instance, keep this value at 1; it cannot exceed the number of brokers. The partitions option sets how many partitions the topic's data is split into, and those partitions are spread across the available brokers. With a single broker, keep this value at 1 as well.

You can create more topics by running the same command with a different topic name. After that, list the topics on your Kafka server to confirm they were created.

Alternatively, instead of creating topics manually, you can configure your brokers to auto-create a topic when a message is published to a topic that does not exist yet.
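A sketch of the create and list commands as Bash functions. It assumes Kafka 2.2 or later, where kafka-topics.sh accepts --bootstrap-server; older releases used --zookeeper localhost:2181 instead. The install path and the create_topic/list_topics names are hypothetical.

```shell
# Helpers for Step 5. KAFKA_HOME and BOOTSTRAP are assumptions: adjust them to your setup.
KAFKA_HOME="${KAFKA_HOME:-/usr/local/kafka}"   # hypothetical install path
BOOTSTRAP="${BOOTSTRAP:-localhost:9092}"       # default listener port in config/server.properties

# Create a topic with one partition and one replica (enough for a single broker).
create_topic() {
  "$KAFKA_HOME/bin/kafka-topics.sh" --create --bootstrap-server "$BOOTSTRAP" \
    --replication-factor 1 --partitions 1 --topic "$1"
}

# List every topic the broker knows about.
list_topics() {
  "$KAFKA_HOME/bin/kafka-topics.sh" --list --bootstrap-server "$BOOTSTRAP"
}

# Example:
#   create_topic testTopic
#   list_topics
```

For the auto-create alternative, the broker setting is auto.create.topics.enable in config/server.properties.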

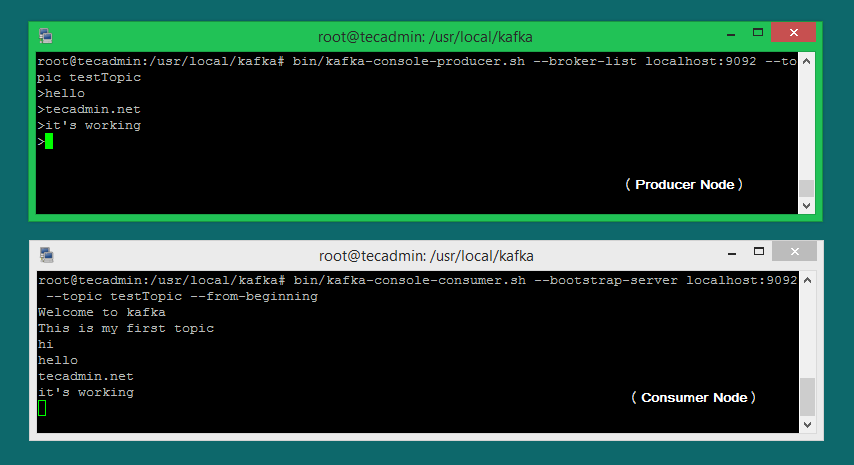

Step 6 – Send Messages to Kafka

The “producer” is the process responsible for putting data into Kafka. Kafka comes with a command-line client that takes input from a file or from standard input and sends it as messages to the Kafka cluster. By default, each line is sent as a separate message. Let’s run the producer and then type a few messages into the console to send to the server. You can exit this command or keep this terminal running for further testing. Now open a new terminal for the Kafka consumer process in the next step.
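A sketch of the producer step as a Bash function that forwards its standard input to the console producer. It assumes Kafka 2.5 or later, where kafka-console-producer.sh accepts --bootstrap-server; older releases take --broker-list localhost:9092 instead. The install path and the produce name are hypothetical.

```shell
# Helpers for Step 6. KAFKA_HOME and BOOTSTRAP are assumptions: adjust them to your setup.
KAFKA_HOME="${KAFKA_HOME:-/usr/local/kafka}"   # hypothetical install path
BOOTSTRAP="${BOOTSTRAP:-localhost:9092}"

# Send stdin to topic $1; the console producer turns each line into one message.
produce() {
  "$KAFKA_HOME/bin/kafka-console-producer.sh" --bootstrap-server "$BOOTSTRAP" --topic "$1"
}

# Examples:
#   produce testTopic                             # type messages, Ctrl+D to quit
#   printf 'first\nsecond\n' | produce testTopic  # two messages from a pipe
#   produce testTopic < messages.txt              # one message per line of a file
```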

Step 7 – Using Kafka Consumer

Kafka also has a command-line consumer that reads data from the Kafka cluster and displays the messages on standard output. Run it in the new terminal. If the Kafka producer from Step 6 is still running in the other terminal, type some text into the producer terminal; it will immediately appear in the consumer terminal. The screenshot below shows the Kafka producer and consumer working together.
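A sketch of the consumer step as a Bash function, with the same assumed install path and bootstrap address as before; consume is a hypothetical name. It passes --from-beginning because without it the console consumer only shows messages produced after it starts, which would hide the ones typed earlier in Step 6.

```shell
# Helpers for Step 7. KAFKA_HOME and BOOTSTRAP are assumptions: adjust them to your setup.
KAFKA_HOME="${KAFKA_HOME:-/usr/local/kafka}"   # hypothetical install path
BOOTSTRAP="${BOOTSTRAP:-localhost:9092}"

# Print messages from topic $1, starting with the oldest one still stored.
# Any extra arguments go straight to the console consumer.
consume() {
  local topic="$1"
  shift
  "$KAFKA_HOME/bin/kafka-console-consumer.sh" --bootstrap-server "$BOOTSTRAP" \
    --topic "$topic" --from-beginning "$@"
}

# Examples:
#   consume testTopic                     # runs until Ctrl+C
#   consume testTopic --max-messages 2    # exits after two messages
```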

Conclusion

You have successfully installed and configured the Kafka service on your Ubuntu Linux machine.