Project Objective

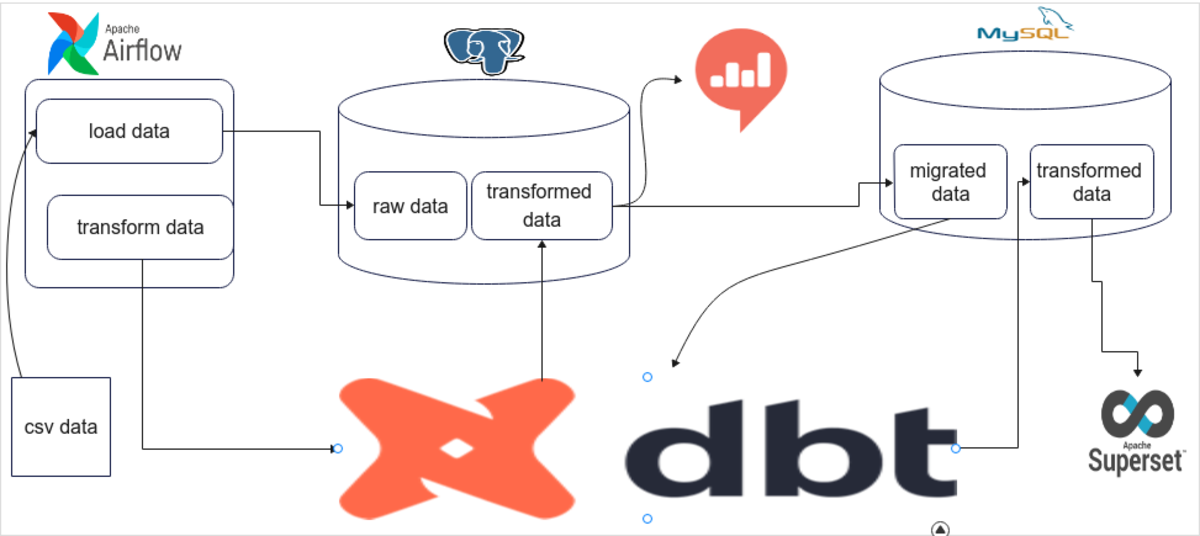

The objective of this project lies in understanding and applying changes and automation with regard to ELT Pipeline and data warehouse. In the previous project, we built a data warehouse using PostgreSQL, Airflow, DBT, and Redash. This time around, we aim to create and manage the automation process of the data warehouse as data migrates into Mysql, Airflow, DBT, and Apache superset. This project would be helpful to data engineers or anyone performing data migration, changes, and automation processes.

Tools Used

Apache Airflow — A workflow manager to schedule, orchestrate and monitor workflows. Directed acyclic graphs (DAG) are used by Airflow to control workflow orchestration. Postgresql — An object-relational database management system (ORDBMS) with an emphasis on extensibility and standards compliance. This is the database which we will be migrating data from. DBT (data build tool) — Enables transforming data in warehouses by simply writing select statements. It handles turning these select statements into tables and views. Redash — An open-source web application used for clearing databases and visualizing the results. This is the dashboard builder tool we will be migrating from. Apache Superset — An open-source data visualization and data exploration platform. It is fast, lightweight, intuitive, and loaded with options that make it easy for users of all skill sets to explore and visualize their data, from simple line charts to highly detailed geospatial charts.

Used Data

The data we will be using for this project can be downloaded from pNEUMA data. pNEUMA is an open large-scale dataset of naturalistic trajectories from half a million vehicles in the congested downtown area of Athens, Greece. The one-of-a-kind experiment used a swarm of drones to collect the data. Each file for a single (area, date, time) is ~87MB of data. This data have been stored in the PostgreSQL database ( Look it up in my last article ).

Project Phases

In this project, we will be using PostgreSQL as our old database and migrating it into MySQL database. Then we will enable transformation using DBT. Last but not least, we will build a dashboard.

Writing Airflow Dags

At this phase, we already have the data, so the main part of the project—migrating from a database to a different database—can become difficult. But here I will show how I was able to migrate my data from Postgres to MySQL. This code will enable us to get all schema names with their table name from a database name parameter.

Migration Utility Codes

This code will enable us to create our database and table dynamically. This is one of the important functions because they are usually the first steps in data migration. The “get_schema_and_table_name” function will enable us to get all schema names with their table name from a database name parameter.

Data Migration Orchestrator Codes

Those are probably the most important code in our project as they are the ones orchestrating and calling every function. The “create schemas_and_load_data” function handles the process after fetching the schema and table names from PostgreSQL. Its process summary would be as follows:

Airflow Dags to Call the Above Functions

Privilege Migration Codes

At this stage, we have migrated all the data from PostgreSQL but user privileges are left to be migrated, as migrating only the data is not enough. So on this function, the steps, in summary, would be:

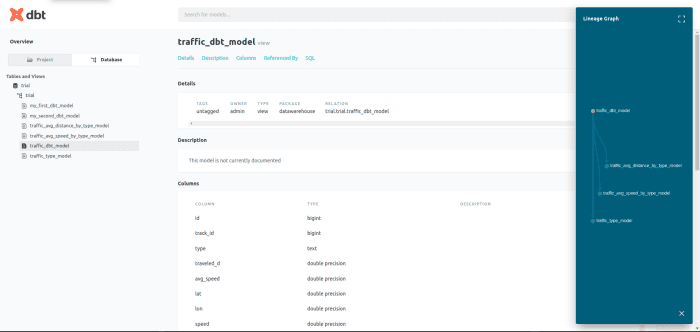

DBT Codes for MYSQL

The DBT codes here are not much different from the PostgreSQL DBT codes. Here is a little DBT code for MySQL:

schema.yml

traffic_dbt_model.sql

traffic_avg_speed_by_type_model.sql

Those DBT codes would create multiple views transforming the data. After this DBT would enable us to document our models and serve them locally.

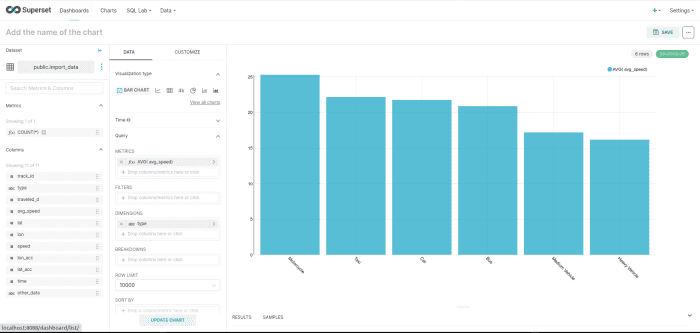

Building a Dashboard Using Apache Superset

Apache Superset really a powerful tool that enables building dashboards with visualization user interface and also from SQL queries. Here are some of the charts I was able to create.

Project Challenges and Takeaways

This project has been helpful in understanding the data migration process and which challenges we might face in real-world data migration projects. Plus, we were able to understand the structure of PostgreSQL and MySQL databases and privilege models. Some pointers include:

While working on this project I have observed and researched to find a module or application that enables to migrate data from PostgreSQL to MySQL. But all I could find was MySQL to Postgres, which means more people and companies are moving toward Postgres not MySQL. One reason could be scalability. Inside PostgreSQL, one can store multiple schemas and each has the ability to store multiple tables while in MySQL a schema is the database, which means a database can not have multiple schemas. This makes it difficult if there are two databases with the same schema or two schemas with the same table names. User privilege structure in PostgreSQL has more data than MySQL, which includes the information about who granted which access to a user. I found Apache Superset to be easy to use and have an easy installation process compared with Redash. PostgreSQL has more data type support than MySQL, but MySQL has sub datatype that is not available in PostgreSQL, which makes it difficult to convert data types between the databases.

Future Plans and Conclusion

This project has enabled me to look deep into database architectures and models in databases like PostgreSQL and MySQL. It also allowed me to compare dashboard building tools, such as Redash and Superset. Key future works include:

Data Warehouse Tech Stack MigrationContribute to tesfayealex/Data-Warehouse-techstack-migration development by creating an account on GitHub.

This content is accurate and true to the best of the author’s knowledge and is not meant to substitute for formal and individualized advice from a qualified professional.